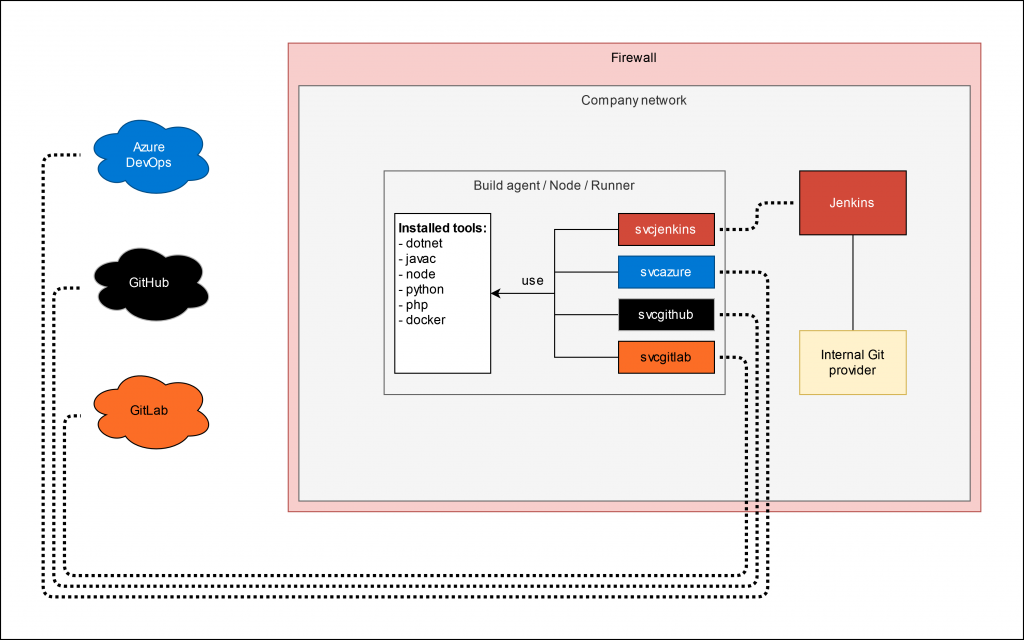

Assume you have a build agent with a specific toolset installed, for example certain compilers, build tools and configurations. Part of the team works with Provider A (say GitHub), another part with Provider B (say GitLab), and a third part works with the internal Git repositories and a locally installed Jenkins instance. The whole setup runs in a company network secured by a firewall and is laid out roughly as shown in the diagram.

The basic principle is that each agent opens the connection to its service, i.e. outbound from the company network to the cloud provider, and not the other way round. As a result, no inbound route through the firewall is required: as soon as an agent is up and running, it connects to the cloud provider and appears as "online".
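The direction of the connection can be illustrated with a small in-process simulation. This is a minimal sketch, not how any of the real agents are implemented: `Provider`, `Agent` and `poll_once` are hypothetical names, and the in-memory queue stands in for a provider's job API. What matters is that only the agent calls the provider; the provider never calls the agent.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Provider:
    """Hypothetical stand-in for a CI service (GitHub, GitLab, Jenkins).

    It only holds pending jobs and has no way to reach the agent.
    """
    name: str
    pending: deque = field(default_factory=deque)

    def next_job(self) -> Optional[str]:
        # Called by the agent over its outbound connection.
        return self.pending.popleft() if self.pending else None


@dataclass
class Agent:
    """Hypothetical agent that pulls work from the providers it is registered with."""
    host: str
    providers: list
    log: list = field(default_factory=list)

    def poll_once(self) -> int:
        # One polling round: ask each provider for work (outbound calls only).
        ran = 0
        for provider in self.providers:
            job = provider.next_job()
            if job is not None:
                self.log.append(f"{self.host}: ran {job} from {provider.name}")
                ran += 1
        return ran


github = Provider("GitHub", deque(["build-firmware"]))
gitlab = Provider("GitLab", deque(["run-tests", "package"]))
agent = Agent("build-agent-01", [github, gitlab])

# Keep polling until no provider has work left.
while agent.poll_once():
    pass
for line in agent.log:
    print(line)
```

A real agent would keep polling (or long-poll) with a pause between rounds instead of stopping once the queues are empty; the simulation stops only so that it terminates.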

Advantages

- All tools are available to every team, and maintenance only has to be done once.

- Each agent service runs as a separate user, so access to external entities can be managed per service (e.g. SSH access via public key).

- Separate workspaces, and access to them for analysis, can be granted per team.

- Works for both Windows and Linux build agents.

- Per-provider configuration is possible via each service user's local home directory (local toolset).

- Scalable: just clone the agent, change the host name and connect it to the providers.

- Builds can access internal resources, for example for publishing and deployment.
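To illustrate the one-user-per-service idea on a Linux agent, the sketch below renders one systemd unit per provider, each running under its own user with that user's home directory as workspace. It rests on assumptions: the user names, paths and start commands are hypothetical placeholders and must be replaced with whatever the respective agent (GitHub Actions runner, GitLab Runner, Jenkins agent) actually needs. Only the systemd directives themselves (`User=`, `WorkingDirectory=`, `ExecStart=`, `Restart=`) are standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentService:
    provider: str     # e.g. "github", "gitlab", "jenkins"
    user: str         # dedicated service user (hypothetical name)
    exec_start: str   # start command of the provider's agent (placeholder)

    @property
    def home(self) -> str:
        # Per-provider workspace and local toolset live in the user's home.
        return f"/home/{self.user}"


def systemd_unit(svc: AgentService) -> str:
    """Render a minimal systemd unit that runs one provider's agent as its own user."""
    return "\n".join([
        "[Unit]",
        f"Description=CI agent for {svc.provider}",
        "After=network-online.target",
        "Wants=network-online.target",
        "",
        "[Service]",
        f"User={svc.user}",
        f"WorkingDirectory={svc.home}",
        f"ExecStart={svc.exec_start}",
        "Restart=always",
        "",
        "[Install]",
        "WantedBy=multi-user.target",
        "",
    ])


# Hypothetical services; the ExecStart commands are placeholders, not verified CLI calls.
SERVICES = [
    AgentService("github", "ci-github", "/home/ci-github/runner/run.sh"),
    AgentService("gitlab", "ci-gitlab", "/home/ci-gitlab/bin/start-agent.sh"),
    AgentService("jenkins", "ci-jenkins", "/home/ci-jenkins/bin/start-agent.sh"),
]

for svc in SERVICES:
    print(f"# ci-agent-{svc.provider}.service")
    print(systemd_unit(svc))
```

Because the units contain no host name, they stay valid when the agent host is cloned; only the host name and the registration with each provider change.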

Disadvantages

- Single point of failure: if the agent host goes down, all teams are affected.

- Performance can suffer when all providers run builds at the same time.
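Why performance suffers becomes clear from a quick worst-case estimate: each service picks up jobs independently, so the peak number of parallel builds on the host is the sum of the per-service limits. The numbers below are hypothetical. The limits themselves are typically set through GitLab Runner's global `concurrent` setting and the number of Jenkins executors, while a single GitHub Actions runner processes one job at a time.

```python
def peak_parallel_builds(limits: dict) -> int:
    """Worst case: every service runs its maximum number of jobs at once."""
    return sum(limits.values())


def cores_per_build(cores: int, limits: dict) -> float:
    """CPU cores available per build if all services are busy at the same time."""
    return cores / peak_parallel_builds(limits)


# Hypothetical per-service limits on one 16-core agent host.
limits = {"github": 1, "gitlab": 4, "jenkins": 3}
print(peak_parallel_builds(limits))   # 8
print(cores_per_build(16, limits))    # 2.0
```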

Challenges

- Compiler versioning, once static (fixed-version) compilers are required in the future, e.g. for firmware compilation for specific hardware. This can be solved with Docker.
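A minimal sketch of the Docker approach, assuming the compiler is packaged into an image whose tag pins its version: the build runs inside that image with the agent's workspace mounted, so several compiler versions can coexist on the same agent. The image name `firmware-toolchain`, its tag and the `make all` build command are hypothetical; `--rm`, `-v` and `-w` are standard `docker run` options.

```python
import shlex


def docker_build_cmd(workspace: str, image: str, version: str, build: list) -> list:
    """Build the argv for running a build inside a version-pinned toolchain image."""
    return [
        "docker", "run", "--rm",      # remove the container after the build
        "-v", f"{workspace}:/src",    # mount the agent's workspace into the container
        "-w", "/src",                 # run the build from the mounted workspace
        f"{image}:{version}",         # the tag pins the compiler version
        *build,
    ]


# Hypothetical workspace, image and build command.
cmd = docker_build_cmd("/home/ci-gitlab/builds/fw", "firmware-toolchain", "4.2", ["make", "all"])
print(shlex.join(cmd))
# On a host with Docker installed, subprocess.run(cmd, check=True) would execute it.
```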

Conclusion

This article only scratches the surface of the topic, but it shows that existing resources can be used more efficiently, with little effort, by connecting one agent to different cloud providers, which lets each team keep working in the environment it knows. Additionally, the ops team can manage the agents centrally and keep the tools up to date.